An early overview of ICLR2018 (part 3)

31 Jan 2018

Finally, decisions for the ICLR2018 conference are out! Congratulations to those who got their papers accepted, and I hope the reviews serve as a source of inspiration for those whose papers were rejected.

Now that the acceptance decisions are out and author names have been disclosed, let’s play with the data to find interesting papers, influential researchers, and the most significant organizations of the 2018 edition of this great conference :)

An analysis of the submission process can be found in part 1, and an analysis of the review process in part 2.

ICLR 2018 is growing fast. The program committee has officially stated that 935 conference submissions were received, 505 more than last year (430 submissions in 2017). Of these 935, 23 have been accepted as orals (2%) and 314 as posters (34%).
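The percentages above are rounded to whole numbers. This small snippet re-derives them from the counts stated in the paragraph; no data beyond those counts is assumed.

```python
# Counts as stated in this post (ICLR 2017 vs. 2018 conference submissions).
submissions_2017 = 430
submissions_2018 = 935
oral_2018 = 23
poster_2018 = 314

growth = submissions_2018 - submissions_2017
oral_rate = oral_2018 / submissions_2018
poster_rate = poster_2018 / submissions_2018
overall_rate = (oral_2018 + poster_2018) / submissions_2018

print(f"growth: +{growth} submissions")  # growth: +505 submissions
print(f"oral:    {oral_rate:.1%}")        # oral:    2.5%
print(f"poster:  {poster_rate:.1%}")      # poster:  33.6%
print(f"overall: {overall_rate:.1%}")     # overall: 36.0%
```

In other words, a bit more than one in three submissions was accepted in some form.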

I have gathered the exact data which is summarized in the following plot:

Not surprisingly, the three most frequent keywords this year have been deep learning, reinforcement learning, and neural networks. But which topics have been the most successful?

As can be seen, meta-learning, exploration, model compression, adversarial examples, and variational inference are the hottest topics this year. For instance, 85% of the papers with the keyword exploration were accepted, while classification and cnn show only a 12% acceptance rate. Curiously, submissions tagged convolutional neural networks have a higher acceptance rate than those tagged cnn.
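One way to compute a per-keyword acceptance rate is to count, for every keyword, how many submissions carry it and how many of those were accepted. The sketch below assumes each submission is a dict with hypothetical `keywords` and `decision` fields, with decisions as strings such as "Accept (Oral)", "Accept (Poster)" or "Reject"; the toy records are made up and are not the real ICLR data. Keywords are lower-cased so capitalization variants merge, but synonyms such as "cnn" and "convolutional neural networks" still count as separate keywords, which is how the curious gap mentioned above can arise.

```python
from collections import Counter


def keyword_acceptance(submissions, min_count=1):
    """Return {keyword: (n_submissions, acceptance_rate)} for each keyword."""
    total = Counter()
    accepted = Counter()
    for sub in submissions:
        is_accepted = sub["decision"].startswith("Accept")
        # Normalize case and drop duplicate keywords within one submission.
        for kw in {k.strip().lower() for k in sub["keywords"]}:
            total[kw] += 1
            if is_accepted:
                accepted[kw] += 1
    return {
        kw: (n, accepted[kw] / n)
        for kw, n in total.items()
        if n >= min_count
    }


# Toy records (hypothetical, for illustration only).
toy = [
    {"keywords": ["Exploration", "reinforcement learning"], "decision": "Accept (Poster)"},
    {"keywords": ["exploration"], "decision": "Accept (Oral)"},
    {"keywords": ["cnn", "classification"], "decision": "Reject"},
    {"keywords": ["Convolutional Neural Networks"], "decision": "Accept (Poster)"},
]

rates = keyword_acceptance(toy)
for kw, (n, rate) in sorted(rates.items(), key=lambda item: -item[1][1]):
    print(f"{kw:35s} n={n}  accepted={rate:.0%}")
```

On real data, raising `min_count` is worthwhile: a keyword used by only a handful of papers can show an extreme rate purely by chance.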

As for the submission scores, it is interesting to see that some submissions were accepted as posters with a mean score of ~8, and one as an oral with a mean score of ~6. To find these exact instances, the following table with all the submissions can be used:
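The same search can also be done programmatically. A minimal sketch, assuming the table has been exported as a list of rows with hypothetical `title`, `decision` and `scores` fields; the thresholds (posters with a mean of at least 7.5, orals with a mean of at most 6.0) are arbitrary choices for illustration. Apart from the Progressive GAN scores, which are the ones quoted in this post, the rows are made up.

```python
from statistics import mean


def unusual_decisions(rows, poster_min=7.5, oral_max=6.0):
    """Flag posters with a high mean score and orals with a low one."""
    flagged = []
    for row in rows:
        avg = mean(row["scores"])
        if row["decision"] == "Accept (Poster)" and avg >= poster_min:
            flagged.append((row["title"], row["decision"], round(avg, 2)))
        elif row["decision"] == "Accept (Oral)" and avg <= oral_max:
            flagged.append((row["title"], row["decision"], round(avg, 2)))
    return flagged


rows = [
    # Scores as quoted in this post.
    {"title": "Progressive Growing of GANs for Improved Quality, Stability, and Variation",
     "decision": "Accept (Oral)", "scores": [8, 1, 8]},
    # Hypothetical rows, for illustration only.
    {"title": "Paper A", "decision": "Accept (Poster)", "scores": [8, 8, 7]},
    {"title": "Paper B", "decision": "Accept (Poster)", "scores": [6, 6, 7]},
]

for title, decision, avg in unusual_decisions(rows):
    print(f"{avg:5.2f}  {decision:16s}  {title}")
```

A single outlier review pulls the mean down considerably, which is why looking at the individual scores, and not just their mean, matters for the cases below.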

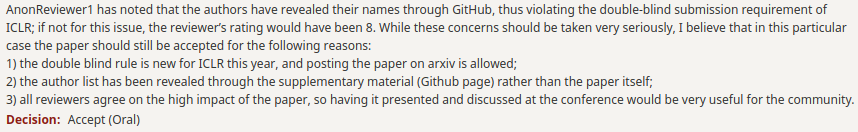

Browsing it, I found that Progressive Growing of GANs for Improved Quality, Stability, and Variation was accepted as an oral with a mean score of 5.67. The individual scores were 8, 1, and 8; however, the 1 was due to a concern about the anonymization of the data. In the end, the decision was to overlook it for the following reasons:

In fact, all orals but one have at least one score of 8 or higher. The only exception is Beyond Word Importance: Contextual Decomposition to Extract Interactions from LSTMs, for which the review discussion led Reviewer3 to raise their score:
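Checking this kind of claim only needs the maximum score of each oral. A sketch under the same assumed row layout (hypothetical `title`, `decision` and `scores` fields); the scores below are placeholders, not real reviews.

```python
def orals_without_high_score(rows, threshold=8):
    """Return titles of orals whose best review score is below `threshold`."""
    return [
        row["title"]
        for row in rows
        if row["decision"] == "Accept (Oral)" and max(row["scores"]) < threshold
    ]


rows = [
    # Hypothetical scores, for illustration only.
    {"title": "Oral X", "decision": "Accept (Oral)", "scores": [8, 7, 6]},
    {"title": "Oral Y", "decision": "Accept (Oral)", "scores": [7, 7, 6]},
    {"title": "Poster Z", "decision": "Accept (Poster)", "scores": [6, 6, 5]},
]

print(orals_without_high_score(rows))  # ['Oral Y']
```

The result depends on which scores are used: if a reviewer raises a rating during the discussion, as in the case here, only the final, post-discussion scores reflect it.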

Explanations are convincing, I revise my rating.

- AnonReviewer3

Another borderline case is Distributed Fine-tuning of Language Models on Private Data, which was accepted as a poster with a mean score of 4.33 (4, 4, 5). In this case, the reviewers were concerned about the simplicity and novelty of the paper, but the meta-reviewer did not find those concerns strong enough to warrant a rejection. There are more cases where the acceptance or rejection differs from what the reviews suggest, especially at the borderline, where a sympathetic meta-review can override the reviews.

This makes one think about the influence of luck on the decision process. Is publishing a matter of statistics? We can plot the submissions per author to see whether publishing is a matter of quantity or quality:

It seems that the probability of acceptance is usually around 50%. However, I beg the reader not to draw immediate conclusions, because reality is far more complex and most of the authors in the graph are often not the first authors of their papers. In fact, most first authors do not appear in the graph at all, because many of them submitted only one manuscript.
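The caveat above follows from how such a plot is usually built: every co-author of a submission gets credit for it, so senior authors on many papers dominate. A minimal sketch, assuming hypothetical `authors` (ordered, first author first) and `decision` fields and made-up names:

```python
from collections import defaultdict


def author_stats(submissions):
    """Map author -> [submitted, accepted, first_authored]."""
    stats = defaultdict(lambda: [0, 0, 0])
    for sub in submissions:
        accepted = sub["decision"].startswith("Accept")
        for position, author in enumerate(sub["authors"]):
            entry = stats[author]
            entry[0] += 1
            entry[1] += int(accepted)
            entry[2] += int(position == 0)
    return dict(stats)


# Toy data (hypothetical names), for illustration only.
subs = [
    {"authors": ["Student A", "Senior S"], "decision": "Accept (Poster)"},
    {"authors": ["Student B", "Senior S"], "decision": "Reject"},
    {"authors": ["Student C", "Senior S"], "decision": "Accept (Oral)"},
    {"authors": ["Student D"], "decision": "Reject"},
]

stats = author_stats(subs)
for author, (n, acc, first) in sorted(stats.items(), key=lambda kv: -kv[1][0]):
    print(f"{author:10s} submitted={n} accepted={acc} rate={acc / n:.0%} first-author={first}")
```

If the plot keeps only authors with several submissions, then in this toy data only the senior author survives the filter, while every first author, with a single submission each, drops out.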

This also raises the question of which organizations are the most prolific:

Google again ;) takes the lead, with its researchers appearing in 121 submissions (97 last year)! This year Carnegie Mellon has overtaken Berkeley, and Microsoft has grown too. It is funny to see organizations such as socher.org, which comes from Richard Socher's e-mail address.
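As the socher.org example shows, organizations here come from author e-mail domains. A minimal sketch of that idea, assuming each submission lists hypothetical author `emails`; the alias table that merges sub-domains (e.g. `cs.cmu.edu` into `cmu.edu`) is my own assumption, and counting each organization at most once per submission is a design choice, so that a paper with three Google authors counts as one Google submission.

```python
from collections import Counter

# Hypothetical alias table merging sub-domains into one organization.
ALIASES = {
    "cs.cmu.edu": "cmu.edu",
    "andrew.cmu.edu": "cmu.edu",
    "eecs.berkeley.edu": "berkeley.edu",
}


def organization(email):
    """Map an e-mail address to an organization via its domain."""
    domain = email.rsplit("@", 1)[-1].lower()
    return ALIASES.get(domain, domain)


def submissions_per_org(submissions):
    counts = Counter()
    for sub in submissions:
        # A set, so each organization is counted once per submission.
        counts.update({organization(e) for e in sub["emails"]})
    return counts


# Toy data (hypothetical addresses), for illustration only.
subs = [
    {"emails": ["a@google.com", "b@google.com", "c@cs.cmu.edu"]},
    {"emails": ["d@andrew.cmu.edu", "e@berkeley.edu"]},
    {"emails": ["f@socher.org"]},
]

for org, n in submissions_per_org(subs).most_common():
    print(f"{org:15s} {n}")
```

Without some alias handling, one university can be split across several department domains, while personal domains like socher.org show up as organizations of their own.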

Although there is competition between organizations, collaboration is key to success. This is reflected in the following graph (best viewed on a computer):

As can be seen, Google, Microsoft, Carnegie Mellon, and Berkeley are the most connected organizations, which also matches their numbers of submitted and accepted papers.
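A collaboration graph of this kind can be built by adding an edge between every pair of organizations that share a submission, weighted by the number of shared submissions; an organization's weighted degree then measures how connected it is. The sketch below uses only the standard library and made-up data with a hypothetical `orgs` field; the tool actually used to draw the figure is not stated, so none is assumed.

```python
from collections import Counter
from itertools import combinations


def collaboration_edges(submissions):
    """Count shared submissions for every unordered pair of organizations."""
    edges = Counter()
    for sub in submissions:
        orgs = sorted(set(sub["orgs"]))
        for a, b in combinations(orgs, 2):
            edges[(a, b)] += 1
    return edges


def weighted_degree(edges):
    """Sum of edge weights touching each organization."""
    degree = Counter()
    for (a, b), w in edges.items():
        degree[a] += w
        degree[b] += w
    return degree


# Toy data (hypothetical collaborations), for illustration only.
subs = [
    {"orgs": ["google.com", "cmu.edu"]},
    {"orgs": ["google.com", "berkeley.edu", "cmu.edu"]},
    {"orgs": ["microsoft.com", "google.com"]},
    {"orgs": ["socher.org"]},
]

edges = collaboration_edges(subs)
for org, d in weighted_degree(edges).most_common():
    print(org, d)
```

Single-organization submissions add no edges, so an organization that never collaborates does not appear in the graph at all. For drawing, the weighted edge list can be handed to a graph library such as NetworkX.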

This is the last post of the ICLR2018 overview series. I hope you enjoyed reading it as much as I enjoyed writing it and fiddling with the data. If you find any inaccuracies, do not hesitate to let me know.

Acknowledgements

Thanks to @pepgonfaus for reviewing and encouraging me to write this series of posts.