An early overview of ICLR2019

07 Oct 2018

This year’s conference will be held on May 6-9 in New Orleans. There are no big changes with respect to the last edition, except for the Workshop track, which will be held as small concurrent events with a separately chaired process.

Anyway, let’s take a look at the ICLR2019 reviews! For those who like to browse the data themselves, here is the table with all the submissions and their scores weighted by reviewers’ confidence ;)
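As a rough sketch of what "weighted by reviewers’ confidence" could mean, the snippet below computes a confidence-weighted mean and standard deviation for one submission. The function name `weighted_stats` and the sample numbers are hypothetical, and the exact weighting used for the table is an assumption.

```python
from math import sqrt

def weighted_stats(ratings, confidences):
    """Return (weighted mean, weighted std) of ratings, using confidences as weights."""
    total = sum(confidences)
    if total == 0:
        raise ValueError("confidences must not sum to zero")
    mean = sum(r * c for r, c in zip(ratings, confidences)) / total
    var = sum(c * (r - mean) ** 2 for r, c in zip(ratings, confidences)) / total
    return mean, sqrt(var)

# Hypothetical submission: three reviews with ratings (1-10) and confidences (1-5).
mean, std = weighted_stats([7, 8, 4], [4, 5, 2])
print(f"weighted mean = {mean:.2f}, weighted std = {std:.2f}")
```

With this scheme, a low rating from an unsure reviewer pulls the score down less than the same rating from a confident one.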

Top-10 rated papers

These are the 10 highest-rated submissions:

| # | Title | Authors | Rating | Std |
|---|---|---|---|---|
| 1 | Benchmarking Neural Network Robustness to Common Corruptions and Perturbations | Anon | 9.0 | 0.0 |
| 2 | Sparse Dictionary Learning by Dynamical Neural Networks | Anon | 8.5 | 0.5 |
| 3 | KnockoffGAN: Generating Knockoffs for Feature Selection using Generative Adversarial Networks | Anon | 8.5 | 1.5 |
| 4 | Large Scale GAN Training for High Fidelity Natural Image Synthesis | Anon | 8.5 | 1.2 |
| 5 | GENERATING HIGH FIDELITY IMAGES WITH SUBSCALE PIXEL NETWORKS AND MULTIDIMENSIONAL UPSCALING | Anon | 8.4 | 1.4 |
| 6 | Variational Discriminator Bottleneck: Improving Imitation Learning, Inverse RL, and GANs by Constraining Information Flow | Anon | 8.2 | 1.6 |
| 7 | ALISTA: Analytic Weights Are As Good As Learned Weights in LISTA | Anon | 8.2 | 1.6 |
| 8 | Ordered Neurons: Integrating Tree Structures into Recurrent Neural Networks | Anon | 8.1 | 0.8 |
| 9 | Slimmable Neural Networks | Anon | 8.1 | 0.8 |
| 10 | Posterior Attention Models for Sequence to Sequence Learning | Anon | 8.0 | 0.8 |

The first impression is that scores are lower this year. Let’s check the score histogram:

Indeed, scores are lower: the average score has dropped from 5.4 last year to 5.1 this year (it was 5.7 in 2017). Assuming an acceptance rate similar to last year’s 36%, this year’s cut score should be around 5.5.
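One simple way to turn an acceptance rate into a cut score is to rank the submissions by score and read off the score of the last one that would still get in. The sketch below does that. The helper `cut_score` and the ten sample scores are hypothetical, and it assumes decisions follow the score ranking exactly, which real decisions do not.

```python
def cut_score(mean_scores, acceptance_rate):
    """Lowest score still accepted if the top `acceptance_rate` fraction gets in."""
    if not mean_scores:
        raise ValueError("need at least one score")
    ranked = sorted(mean_scores, reverse=True)
    # Number of accepted submissions, never fewer than one.
    n_accepted = max(1, round(len(ranked) * acceptance_rate))
    return ranked[n_accepted - 1]

# Hypothetical mean scores for ten submissions (not the real ICLR data).
scores = [3.0, 4.0, 4.3, 4.7, 5.0, 5.3, 5.7, 6.0, 6.7, 8.0]
print(cut_score(scores, 0.36))
```

Run on the full list of submission scores with a rate of 0.36, the same function gives an estimate comparable to the one quoted above.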

What about the most controversial papers?

Top-5 controversial papers

These are the submissions with the highest discrepancy between reviewers. A clear example is Large-Scale Visual Speech Recognition, where the authors construct a large-scale dataset for lip reading and propose a pipeline that outperforms previous approaches, as well as human lipreaders. In a review titled “Engineering Marvel” with a score of 3/10 and a confidence of 5, Reviewer1 claims there is no novelty and provides a list of issues. In contrast, Reviewer3 rates the submission 9/10 with a confidence of 4, arguing that it is useful for the research community, since it provides a large dataset and a strong baseline.

| # | Title | Authors | Std | Min | Max |
|---|---|---|---|---|---|
| 1 | Large-Scale Visual Speech Recognition | Anon | 3.0 | 3 | 9 |
| 2 | Invariant and Equivariant Graph Networks | Anon | 2.5 | 4 | 9 |
| 3 | An adaptive homeostatic algorithm for the unsupervised learning of visual features | Anon | 2.5 | 4 | 9 |
| 4 | Per-Tensor Fixed-Point Quantization of the Back-Propagation Algorithm | Anon | 2.5 | 3 | 8 |
| 5 | Unsupervised Neural Multi-Document Abstractive Summarization | Anon | 2.5 | 3 | 9 |
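A ranking like the one in the table can be sketched by sorting submissions on the standard deviation of their ratings. The snippet below uses the population standard deviation (`statistics.pstdev`), which is an assumption about how the Std column was computed. The function name and the two placeholder papers are hypothetical, and the first entry uses only the two ratings quoted in the post.

```python
from statistics import pstdev

def rank_by_disagreement(ratings_by_title, top=5):
    """Return (title, std, min, max) tuples, most disputed first."""
    rows = [
        (title, pstdev(r), min(r), max(r))
        for title, r in ratings_by_title.items()
        if len(r) >= 2  # a single review cannot disagree with itself
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)[:top]

# Hypothetical ratings, apart from the 3 and 9 quoted in the post.
ratings = {
    "Large-Scale Visual Speech Recognition": [3, 9],
    "Hypothetical paper A": [6, 7, 7],
    "Hypothetical paper B": [4, 8],
}
for row in rank_by_disagreement(ratings):
    print(row)
```

For two ratings of 3 and 9, the population standard deviation is 3.0, which agrees with the first row of the table.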

Now we know the top-rated and the most controversial submissions, as well as the likely cut score. But what about the topic? Are some topics better rated than others?

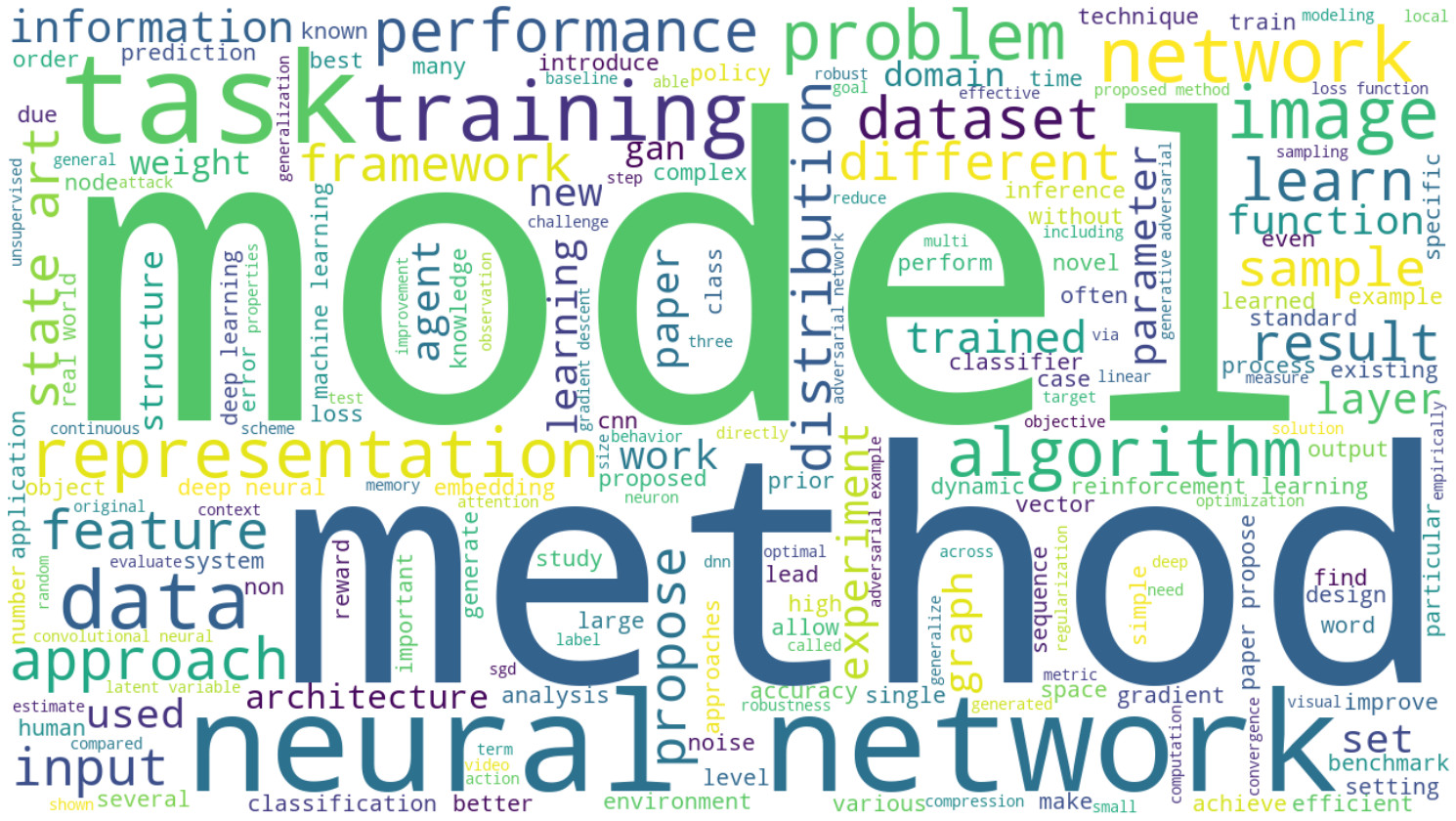

Keywords

We can take a look at the keywords and how they are rated:

This is the rating histogram of the top-25 keywords. Optimization and variational inference are the best-rated keywords, while machine learning and interpretability are the worst-rated ones.
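A per-keyword comparison of this kind can be sketched by grouping submission ratings under each keyword and keeping only the most frequent keywords. The snippet below summarizes each keyword by its mean rating, which is an assumption since the post shows a histogram. The function name, the lowercase normalization, and the sample data are all hypothetical.

```python
from collections import defaultdict

def keyword_ratings(submissions, top=25):
    """Mean rating per keyword, restricted to the `top` most frequent keywords."""
    by_keyword = defaultdict(list)
    for keywords, rating in submissions:
        # Normalize case and whitespace so "Optimization" and "optimization" match.
        for kw in {k.strip().lower() for k in keywords}:
            by_keyword[kw].append(rating)
    most_common = sorted(by_keyword, key=lambda k: len(by_keyword[k]), reverse=True)[:top]
    means = {k: sum(by_keyword[k]) / len(by_keyword[k]) for k in most_common}
    # Best-rated keywords first.
    return sorted(means.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical (keywords, mean rating) pairs; not the real ICLR data.
subs = [
    (["Optimization", "deep learning"], 6.5),
    (["optimization"], 7.0),
    (["interpretability", "deep learning"], 4.5),
    (["interpretability"], 5.0),
]
for keyword, mean in keyword_ratings(subs):
    print(keyword, mean)
```

Restricting to frequent keywords keeps rarely used keywords, which have only one or two ratings, from dominating either end of the ranking.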

Reviewer’s confidence

As last year, confident reviewers tend to produce more extreme scores. In fact, not only confidence but also the review deadline affects extreme ratings:

Surprisingly, this year most of the rejects are concentrated in the last days. This is different from ICLR2018, when most of them were concentrated at the beginning.

However, it is no surprise that most reviews were submitted in the last days:
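The timing effects described above can be tabulated by counting, for each day, how many reviews came in and what share of them were rejects. This is a minimal sketch: the function name, the "days before the deadline" encoding, the reject threshold of 5, and the sample reviews are all assumptions.

```python
from collections import Counter

def daily_reject_share(reviews, reject_below=5):
    """Map each day to (number of reviews, share of them rated below `reject_below`)."""
    total, rejects = Counter(), Counter()
    for day, rating in reviews:
        total[day] += 1
        if rating < reject_below:
            rejects[day] += 1
    return {day: (total[day], rejects[day] / total[day]) for day in sorted(total)}

# Hypothetical (days before the review deadline, rating) pairs; not the real ICLR data.
reviews = [(10, 7), (10, 6), (0, 4), (0, 3), (0, 6)]
for day, (count, share) in daily_reject_share(reviews).items():
    print(f"{day} days left: {count} reviews, {share:.0%} rejects")
```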

That’s all for now. Once the decisions are out, I will update the post with the new information.

About the data

The data was obtained from OpenReview, using their library.